Let’s get this out of the way as soon as possible: different methodologies have different software testing protocols and frameworks. That doesn’t mean we can’t talk about software testing as a whole; it just means we have to be careful to cover what are arguably its most important aspects, so that no one misses them.

First, let’s list those “most important” aspects of Software Testing. Again, different companies might seek different things from testing, but I consider these to be the basics:

- What needs to be tested? You need to find and define which systems depend on your team, and test those. You might not be able to control some outer aspects of software, but you can make sure that your part works.

- What is it supposed to do? Focus on your project’s requirements. Which characteristics and functionality are most important in your software? Once you answer that question, be sure to test them all.

- Nice to haves: Just because something works doesn’t mean that it can’t be better. We all know the classic “if it ain’t broke, don’t fix it”, but remember, we’re not trying to fix what already works; we’re trying to improve on what’s already a good product. Non-functional requirements are sometimes on par with the actual functional requirements in importance.

Some people might think that the third and final point might not be necessary, but believe me when I say, sometimes the only differences between your product and the competition are the nice to haves, and that’s a war you don’t want to lose.

It’s important to know what’s going to be tested before actually testing your software. If there’s no strategy, not only is your software quality going to suffer, but your team as well. A bunch of people with no idea of what they’re supposed to be doing or how they’re supposed to be doing it is a perfect recipe for chaos.

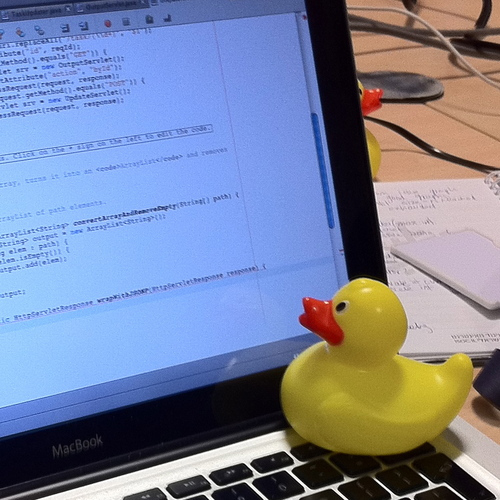

Once a plan has been defined, there needs to be a test design: the set of test scenarios that are necessary to validate your system. Some might be as trivial as making sure that the date is being displayed correctly, and others might test complex communication between different modules in your system. It doesn’t matter how big or small each particular test might be: if it’s needed for a correct user experience, it needs to pass. These core tests are gonna help us discover important errors in our program, not superficial defects.

Then, we execute our tests. It’s common good practice to test small, basic functionality first, and finish off with the more complex tests. This way, if a basic part is malfunctioning, you’ll discover it before having to run a large test.
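To make that ordering concrete, here’s a minimal sketch of a runner that executes the cheapest checks first and stops at the first failure. The `run_in_order` function, the `(name, cost, check)` tuple shape, and the example check names are all made up for illustration; real test runners have their own ways of ordering and stopping early.

```python
def run_in_order(checks):
    """Run (name, cost, check) tuples from cheapest to most expensive.

    Stops at the first failing check. Returns a tuple of
    (names of the checks that ran, name of the failing check or None).
    """
    executed = []
    # Sort by the hypothetical "cost" field so basic checks run first.
    for name, _cost, check in sorted(checks, key=lambda c: c[1]):
        executed.append(name)
        if not check():
            return executed, name  # fail fast: skip the larger tests
    return executed, None


# Hypothetical checks: a broken basic check and two larger ones.
checks = [
    ("full checkout flow", 10, lambda: True),
    ("date formatting", 1, lambda: False),
    ("login", 3, lambda: True),
]
executed, failed = run_in_order(checks)
print(executed, failed)
```

Here the broken date check is caught right away, and the expensive checkout test never has to run.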

Last, but not least: results are gathered, analyzed, and evaluated to assess whether the code is good enough for production or needs some fine-tuning. Companies usually set a minimum percentage of tests that need to pass for a piece of code to be considered “good enough” for production, and while those percentages might not be the same everywhere, they usually fall around the 90% mark. However, if any of the failed tests in the remaining 10% represents a critical malfunction in our code, it needs to be fixed before the code ever sees the light of day.
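As a rough sketch of that rule, the check below approves a build only when the pass rate reaches a threshold and none of the failures is critical. The `Outcome` class, its `critical` flag, and the `ready_for_production` function are names I’m assuming for the example; the 0.90 default simply mirrors the 90% mark mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    name: str
    passed: bool
    critical: bool = False  # assumed flag: a failure here always blocks release


def ready_for_production(outcomes, min_pass_rate=0.90):
    """Return True only if enough tests passed and no critical test failed."""
    if not outcomes:
        return False  # nothing was tested, so nothing can be approved
    passed = sum(1 for o in outcomes if o.passed)
    pass_rate = passed / len(outcomes)
    critical_failure = any(o.critical and not o.passed for o in outcomes)
    return pass_rate >= min_pass_rate and not critical_failure


# Nine passing tests and one non-critical failure: 90% pass rate.
outcomes = [Outcome(f"test_{i}", True) for i in range(9)]
outcomes.append(Outcome("test_9", False))
print(ready_for_production(outcomes))

# Same pass rate, but now the single failure is critical.
outcomes[-1] = Outcome("test_9", False, critical=True)
print(ready_for_production(outcomes))
```

The first call approves the build and the second one blocks it, even though the pass rate is identical in both cases.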

That’s a general guideline of how testing should be done, but feel free to tweak it to your needs. Again, you might need different things so you’ll probably end up doing things differently, but feel free to start off with the base described above.

Now, let’s talk about the actual tests. There’s a level system that makes it easy to categorize each test so that you know what it is that you’re actually testing.

- Unit tests: These are your component by component tests. They test the core of the functionality of your program by making sure that all components work individually before even thinking about communication between them. This helps us know if our software does what it needs to do at a basic level.

- Integration tests: Once the core functionality is there, you need to test how the components work with each other. You test communication between components and find errors in the communication interfaces so that you can fix them ASAP.

- System tests: Once communication between components has been tested, we test the system as a whole. You connect the components in a way that simulates real use, and verify that every requirement is being met and is up to the established quality standards.

- Acceptance tests: The final boss. You need to beat this to determine if your software is ready to see the light or not. Requirements are ever-changing, so you need to constantly validate if your software meets them thoroughly. If it does, great, it goes straight to production. If it doesn’t, you’ll have to work a bit harder before you can release it.
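To show the difference between the first two levels, here’s a small sketch using Python’s built-in `unittest` module. The functions `parse_price`, `apply_discount`, and `discounted_price` are hypothetical components invented for the example: the unit tests exercise each one on its own, and the integration test checks that they work when wired together.

```python
import unittest


def parse_price(text):
    """Component 1: turn a string such as ' 4.50 ' into a float."""
    return float(text.strip())


def apply_discount(price, percent):
    """Component 2: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def discounted_price(text, percent):
    """Both components wired together."""
    return apply_discount(parse_price(text), percent)


class UnitTests(unittest.TestCase):
    """Each component is tested in isolation."""

    def test_parse_price(self):
        self.assertEqual(parse_price(" 4.50 "), 4.5)

    def test_apply_discount(self):
        self.assertEqual(apply_discount(10.0, 25), 7.5)

    def test_apply_discount_rejects_bad_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(10.0, 150)


class IntegrationTests(unittest.TestCase):
    """The output of one component feeds the other."""

    def test_parse_then_discount(self):
        self.assertEqual(discounted_price("20.00", 10), 18.0)
```

Saved to a file, these can be run with `python -m unittest`. System and acceptance tests follow the same idea at a larger scale, against the whole application and its requirements, so they’re harder to squeeze into a snippet.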

That’s it. Four levels. If this were a platforming game, that might be a piece of cake, but since we’re talking about software quality, this is harder than it looks and sounds. At least it will make sure that your code doesn’t hurt anybody. That’s a plus, right?

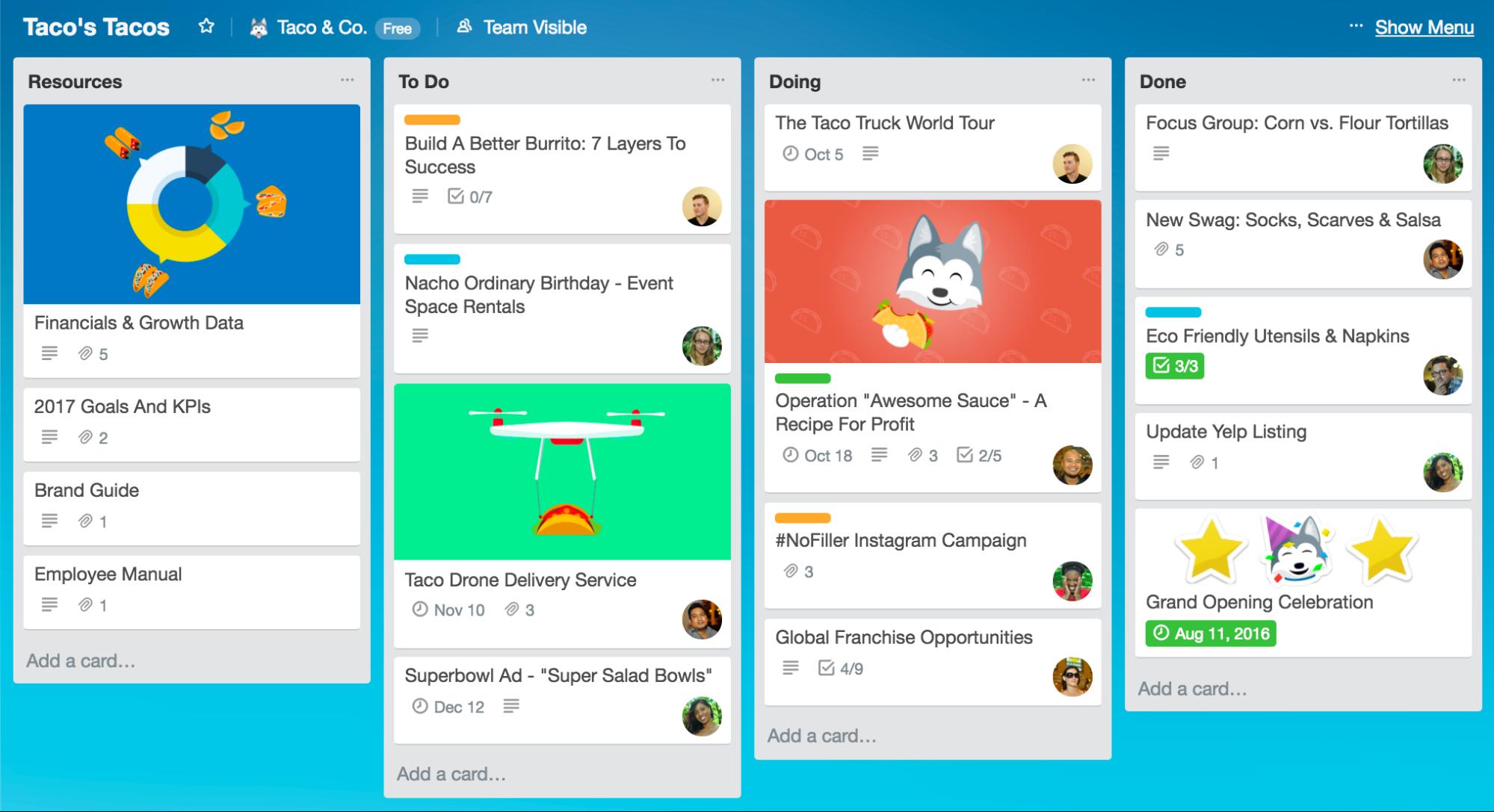

Different people inside the testing process play different roles. There are test leaders, who deal with the administrative side of testing (planning the tests, defining objectives and goals, etc.), and then there are the actual testers, who gather the requirements provided by the leader and configure a proper testing environment to run the tests they developed based on those requirements.

Testing environments are often controlled machines that are guaranteed to work and always execute under the same conditions. All supported applications, modules, and components are available in this testing environment, as well as a network connection if needed. Additionally, they often have specialized execution logging tools that make it easier to report bugs, and they’re in charge of generating the test data that’ll later be analyzed to improve the program.

Testing can become difficult if you don’t know what you expect to get out of the tests you’re running. That’s why test case design techniques exist. You can test with both positive and negative input values, each of which is always supposed to yield the same result. You could also combine positive and negative inputs, along with their different permutations, to assess how the program handles mixed inputs. Finally, if you have people who analyze the data gathered from previous testing rounds, your team could predict future failures and test certain components more thoroughly to make sure that nothing breaks unexpectedly.
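Here’s a minimal sketch of those first two techniques. I’m reading “positive” as inputs the program should accept and “negative” as inputs it should reject. The functions `is_valid_age` and `both_ages_valid` are hypothetical code under test, and the input lists are just examples. `itertools.product` generates every ordered pair, so the mixed combinations are covered too.

```python
from itertools import product


def is_valid_age(value):
    """Hypothetical function under test: accepts whole numbers from 0 to 120."""
    return (
        isinstance(value, int)
        and not isinstance(value, bool)
        and 0 <= value <= 120
    )


def both_ages_valid(first, second):
    """Hypothetical function under test that takes two inputs at once."""
    return is_valid_age(first) and is_valid_age(second)


POSITIVE_INPUTS = [0, 35, 120]           # should be accepted
NEGATIVE_INPUTS = [-1, 121, "35", None]  # should be rejected

# Pair every input with the result we expect from it.
CASES = [(v, True) for v in POSITIVE_INPUTS] + [(v, False) for v in NEGATIVE_INPUTS]


def run_cases():
    """Return a list of the cases whose result did not match the expectation."""
    failures = []
    # Single inputs: positive ones must pass, negative ones must fail.
    for value, expected in CASES:
        if is_valid_age(value) != expected:
            failures.append((value,))
    # Mixed inputs: every ordered pair of positive and negative values.
    for (first, ok_first), (second, ok_second) in product(CASES, repeat=2):
        expected = ok_first and ok_second
        if both_ages_valid(first, second) != expected:
            failures.append((first, second))
    return failures


print(run_cases())
```

An empty list means every single input and every pair behaved as expected.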

If a defect is found, it needs to be categorized, assigned, fixed, and verified by QA. If the defect is fixed properly, it is then closed and the results are reported to prevent it from happening again.
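That lifecycle can be sketched as a small state machine. The `Status` names and the `Defect` class below are my own illustration, not any particular bug tracker’s API, and the path from fixed back to assigned (for a fix that QA rejects) is an assumption on my part.

```python
from enum import Enum


class Status(Enum):
    NEW = "new"
    CATEGORIZED = "categorized"
    ASSIGNED = "assigned"
    FIXED = "fixed"
    VERIFIED = "verified"
    CLOSED = "closed"


# Which status may follow which.
ALLOWED = {
    Status.NEW: {Status.CATEGORIZED},
    Status.CATEGORIZED: {Status.ASSIGNED},
    Status.ASSIGNED: {Status.FIXED},
    # Assumption: if QA rejects the fix, the defect goes back to its assignee.
    Status.FIXED: {Status.VERIFIED, Status.ASSIGNED},
    Status.VERIFIED: {Status.CLOSED},
    Status.CLOSED: set(),
}


class Defect:
    def __init__(self, title):
        self.title = title
        self.status = Status.NEW
        self.history = [Status.NEW]

    def move_to(self, new_status):
        """Advance the defect, refusing any step that skips the process."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError(
                f"cannot go from {self.status.value} to {new_status.value}"
            )
        self.status = new_status
        self.history.append(new_status)


bug = Defect("date shown in the wrong format")
for step in (Status.CATEGORIZED, Status.ASSIGNED, Status.FIXED,
             Status.VERIFIED, Status.CLOSED):
    bug.move_to(step)
print(bug.status)
```

Trying to close a defect that QA hasn’t verified raises a `ValueError`, which is the whole point: no step gets skipped.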