What?

Version control systems (VCS) are a category of software tools that help a team manage changes to source code over time. The tool records every modification to the code in a special kind of database. If a developer makes a mistake, they can go back to an earlier version in which the breaking change did not yet exist. They can also compare the current code with the earlier version to find more easily where the error is located.
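As a rough illustration of comparing two versions of a file, here is a minimal sketch using Python's standard `difflib` module. The file name `area.py` and both versions of its content are made up for the example; a real VCS would read the earlier version from its history.

```python
import difflib

# Two hypothetical versions of the same file, as lists of lines.
old_version = [
    "def area(width, height):",
    "    return width * height",
]
new_version = [
    "def area(width, height):",
    "    return width + height",  # the mistake introduced by the later change
]

# unified_diff yields the differing lines, prefixed with '-' (removed)
# or '+' (added), plus header lines naming the two versions.
diff_lines = list(
    difflib.unified_diff(
        old_version,
        new_version,
        fromfile="area.py (earlier version)",
        tofile="area.py (current version)",
        lineterm="",
    )
)

for line in diff_lines:
    print(line)
```

Only the line that changed shows up with `-` and `+` markers, which is what makes the faulty change easy to spot.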

For almost every software project, the source code is the crown jewel: precious and valuable. It holds the knowledge and understanding of the specific domain that the developers have studied and refined. Version control tools protect the integrity of the source code against human error and accidental mistakes.

Furthermore, source code is alive: it is edited continuously by many members of a team. Version control tools help resolve conflicts between these changes. A change that one developer is working on may be incompatible with a change being made by another developer. Such a situation should be detected and resolved without blocking the other team members.
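To make the idea of detecting incompatible changes concrete, here is a simplified sketch. It is not how any particular VCS implements merging: the function `find_conflicts` and the sample data are hypothetical, and it assumes all three versions have the same number of lines, whereas real tools also handle inserted and deleted lines.

```python
def find_conflicts(base, ours, theirs):
    """Return the indices of lines that both developers changed differently.

    Simplifying assumption: all three versions have the same number of lines.
    """
    conflicts = []
    for index, (b, o, t) in enumerate(zip(base, ours, theirs)):
        changed_by_us = o != b
        changed_by_them = t != b
        # A conflict exists only when both sides edited the same line
        # and ended up with different results.
        if changed_by_us and changed_by_them and o != t:
            conflicts.append(index)
    return conflicts


# The common starting point and two developers' edits (made-up data).
base = ["timeout = 30", "retries = 3", "debug = False"]
alice = ["timeout = 60", "retries = 3", "debug = False"]
bob = ["timeout = 45", "retries = 5", "debug = False"]

conflicting_lines = find_conflicts(base, alice, bob)
print(conflicting_lines)
```

In this example both developers changed the first line in different ways, so it is reported as a conflict. Only one of them changed the second line, so that change could be merged automatically.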

Advantages of version control systems.

- The first obvious advantage of using a VCS is a complete history of all the changes made over time to every file in the project.

- Branches and pull requests. One can create a branch and write or modify code on it without blocking other team members. If an error occurs, one can easily roll back to an earlier version of the code.

- Tracking power. One can see who made each change in a file. This is sometimes used to blame whoever broke the code.
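The advantages listed above (a full history, rolling back, and knowing who changed what) can be sketched with a toy in-memory history. The names `Commit` and `FileHistory` are invented for this example and do not correspond to any real VCS API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Commit:
    author: str
    message: str
    content: str  # full content of the file at this point in time


class FileHistory:
    """Toy history of a single file; illustrative only."""

    def __init__(self):
        self.commits = []

    def commit(self, author, message, content):
        self.commits.append(Commit(author, message, content))

    def log(self):
        # Who changed the file and why, from oldest to newest.
        return [(c.author, c.message) for c in self.commits]

    def rollback(self, steps=1):
        """Return the content as it was `steps` commits before the latest."""
        if steps < 0 or steps >= len(self.commits):
            raise ValueError("not enough history to roll back that far")
        return self.commits[-1 - steps].content


history = FileHistory()
history.commit("alice", "Add greeting", "print('hello')")
history.commit("bob", "Break greeting", "print('hello'")

print(history.log())
print(history.rollback(1))
```

Here the log shows that the second change came from `bob`, and `rollback(1)` recovers the working content that existed before it.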

Git

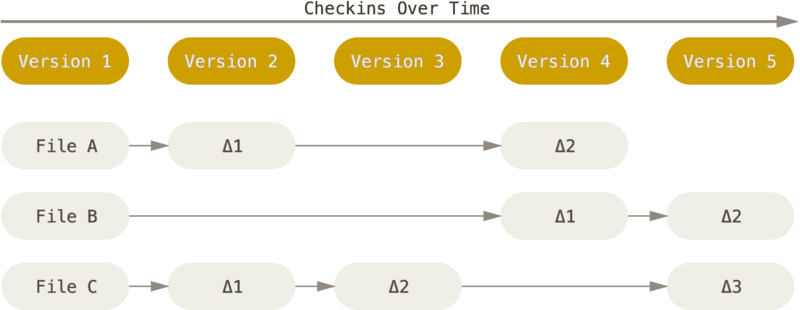

Git is probably the most commonly used VCS. The main difference between Git and most other VCSs is the way it handles data. Most other systems store information as a list of file-based changes.

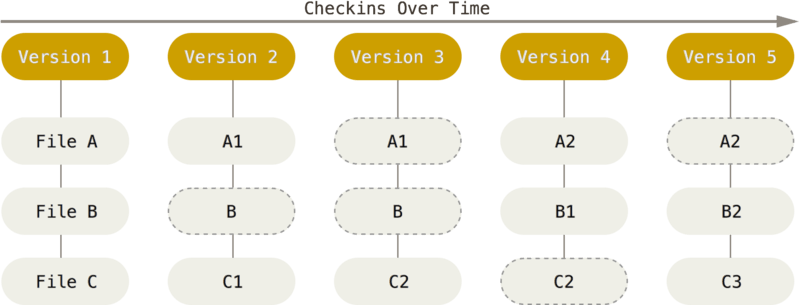

Git does not store information in this manner. Instead, it treats its data as a set of snapshots of a miniature filesystem. Every time you commit, or save the state of the project, Git takes a snapshot of what all the files look like at that moment and stores a reference to that snapshot. Git therefore stores its data as a sequence of snapshots.
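The snapshot idea can be sketched in a few lines of Python. This is a simplified model, not Git's actual object format: the class `SnapshotStore` and its methods are hypothetical, and real Git uses additional object types and its own hashing scheme. The sketch only shows that each saved state is a complete picture of the project, and that unchanged files can be shared between snapshots instead of copied.

```python
import hashlib


class SnapshotStore:
    """Toy snapshot storage; illustrative only, not Git's real format."""

    def __init__(self):
        self.blobs = {}      # content hash -> file content
        self.snapshots = []  # each snapshot maps file name -> content hash

    def save(self, files):
        """Record the state of every file and return the snapshot id."""
        snapshot = {}
        for name, content in files.items():
            digest = hashlib.sha1(content.encode("utf-8")).hexdigest()
            # Identical content hashes to the same key, so an unchanged
            # file is stored once and merely referenced again.
            self.blobs.setdefault(digest, content)
            snapshot[name] = digest
        self.snapshots.append(snapshot)
        return len(self.snapshots) - 1

    def load(self, snapshot_id):
        """Rebuild the full set of files as they were in a snapshot."""
        snapshot = self.snapshots[snapshot_id]
        return {name: self.blobs[digest] for name, digest in snapshot.items()}


store = SnapshotStore()
first = store.save({"README.md": "Hello", "main.py": "print(1)"})
second = store.save({"README.md": "Hello", "main.py": "print(2)"})

print(store.load(first))
print(len(store.blobs))
```

Both snapshots describe the whole project, yet `README.md` is stored only once because its content did not change between them.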

Bibliography

https://www.atlassian.com/es/git/tutorials/what-is-version-control