A software process improvement methodology defines a sequence of tasks, tools and techniques that can be used to improve the process of creating software. There are many models for software process improvement; this entry presents the best-known ones, along with an explanation of each and its characteristics.

CMMI

If you want to develop software for the US government, you will often find that contracts require you to follow the CMMI model. More generally, this model is very useful if you want to run a software development company in the United States, because it is very well known in the country.

Capability Maturity Model Integration (CMMI) is a model administered by the CMMI Institute. It is followed mainly by large companies that offer high-quality, enterprise-level products, which is why the government often requires it in contracts for new software. CMMI's main focus lies in improving risk management.

How it operates:

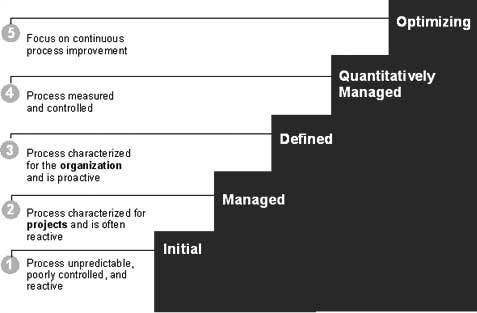

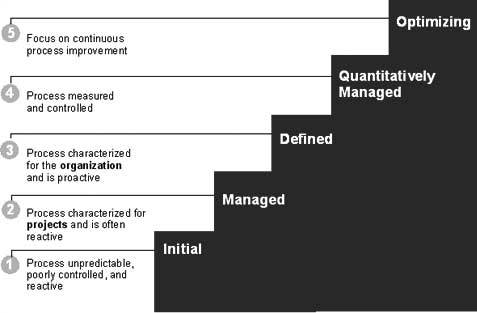

Capability Maturity Model Integration revolves around maturity levels, which are measured by taking into account different aspects of the enterprise being analyzed. The levels range from 1 to 5 and represent how well the company manages its development process and how well its personnel are prepared to follow that process, as well as what must be improved to move up to the highest level, level 5.

Each maturity level has specific and generic goals that must be achieved in order to move up to the next level; these can be seen in the picture above.
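To make the idea of "leveling up" concrete, here is a minimal Python sketch. It assumes that an organization is rated at the highest maturity level for which it satisfies the goals of that level and of every level below it, and that level 1 has no goals to satisfy. The level names are the standard CMMI ones; the `satisfied` mapping and the `maturity_level` function are hypothetical, and a real appraisal is far more involved than a yes/no check per level.

```python
from enum import IntEnum


class MaturityLevel(IntEnum):
    """CMMI maturity levels (staged representation)."""
    INITIAL = 1
    MANAGED = 2
    DEFINED = 3
    QUANTITATIVELY_MANAGED = 4
    OPTIMIZING = 5


def maturity_level(satisfied: dict[MaturityLevel, bool]) -> MaturityLevel:
    """Return the highest level whose goals, and those of every lower level, are met.

    `satisfied` is a hypothetical summary of an appraisal: for each level from
    2 to 5 it says whether all of that level's goals were achieved. Level 1 has
    no goals, so every organization is at least at level 1.
    """
    achieved = MaturityLevel.INITIAL
    for level in list(MaturityLevel)[1:]:  # levels 2..5, in ascending order
        if not satisfied.get(level, False):
            break  # a gap stops the climb: levels must be reached in order
        achieved = level
    return achieved


# Hypothetical appraisal: goals for levels 2 and 3 are met, level 4 is not,
# so the satisfied level 5 goals cannot count yet.
example = {
    MaturityLevel.MANAGED: True,
    MaturityLevel.DEFINED: True,
    MaturityLevel.QUANTITATIVELY_MANAGED: False,
    MaturityLevel.OPTIMIZING: True,
}
print(maturity_level(example).name)  # DEFINED
```

The early `break` captures the staged nature of the model: skipping a level is not possible, so achievements above the first gap are ignored.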

For more information on the meaning of each level and the improvement steps, you can visit this page.

TSP/PSP

The Team Software Process (TSP) and the Personal Software Process (PSP) are process models that help improve development methods overall. Like CMMI, they emphasize process methodology and product quality, but with a key difference. The two are grouped together because they pursue the same kind of goal and measure similar things, such as the delivery time of progress milestones and productivity. The difference lies in whom each one targets: TSP is aimed at teams and groups of people (smaller than the organizations CMMI targets), while PSP is aimed at individuals and focuses on their discipline and skills.

ISO-15504

The international standard ISO-15504, also known as SPICE (Software Process Improvement and Capability Determination), evaluates the capability of software processes, just like CMMI or TSP/PSP. An ISO-15504 certification may not be as useful as CMMI in the United States, but elsewhere, for example in Europe, the two carry roughly equal value.

ISO-15504 revolves around three main areas to judge or improve:

- Process evaluation

- Process improvement

- Process capability or maturity

To assess these three objectives, the model classifies the state of the company on a scale of maturity levels, as well as on a separate scale of capability levels.

Process capability evaluation

This evaluation ranks the overall capability of the processes the evaluated company uses to make its products (how good the company's methods are at producing quality products). It uses six levels, from 0 to 5:

- Level 0: The process is incomplete
  - It is not correctly implemented and does not achieve its objectives.

- Level 1: The process works
  - It is implemented and can reach its objectives.

- Level 2: The process is managed
  - The process is controlled, and its implementation is planned, monitored and adjusted. Its results are established, recorded, controlled and maintained.

- Level 3: The process is established
  - The process is documented in order to guarantee that its objectives are accomplished.

- Level 4: The process is predictable
  - The process operates according to defined performance targets.

- Level 5: The process is optimized
  - The process is continuously improved to help meet current and future goals.
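Because capability is rated per process, the result of this evaluation can be pictured as a profile that maps each assessed process to one of the six levels above. The following minimal Python sketch assumes that representation. The process names and ratings are hypothetical, and the `weakest_processes` helper only illustrates one way such a profile could point at improvement targets; it is not something the standard itself defines.

```python
from enum import IntEnum


class CapabilityLevel(IntEnum):
    """ISO-15504 process capability levels, as listed above."""
    INCOMPLETE = 0
    PERFORMED = 1    # "the process works"
    MANAGED = 2
    ESTABLISHED = 3
    PREDICTABLE = 4
    OPTIMIZED = 5


def weakest_processes(profile: dict[str, CapabilityLevel]) -> list[str]:
    """Return, alphabetically, the processes rated at the lowest level in the profile.

    Hypothetical helper; raises ValueError if the profile is empty.
    """
    lowest = min(profile.values())
    return sorted(name for name, level in profile.items() if level == lowest)


# Hypothetical assessment results for three processes.
profile = {
    "requirements management": CapabilityLevel.MANAGED,
    "software construction": CapabilityLevel.ESTABLISHED,
    "software testing": CapabilityLevel.PERFORMED,
}

# Print the profile from the most to the least capable process.
for name, level in sorted(profile.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: level {int(level)} ({level.name.lower()})")

print(weakest_processes(profile))  # ['software testing']
```

Using `IntEnum` keeps the levels ordered, so comparisons such as `min` work directly on the ratings.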

Process maturity evaluation

This evaluation ranks how well organized and effective a company is at identifying, improving and innovating in order to continuously improve the quality of its products (how good the company itself is at improving and making quality products). It uses five levels:

Level 1: Initial

The organization does not have formal procedures for the evaluation, development and evolution of its applications. When failures occur, whatever methodological foundations exist are abandoned in favor of shortcuts in the implementation and validation process. Organizational efforts then fall back on purely reactive practices, such as "code and test," which make the drift worse.

Level 2: Reproducible

New projects are managed based on the experience gained in similar projects. Knowledge is preserved through the continued commitment of staff, but only for as long as those people remain in the organization.

Level 3: Defined

Project management guidelines and procedures are established to enable implementation. The standard software development and evolution process is documented and integrated into a consistent, comprehensive set of software engineering and project management processes. A training program has been implemented within the organization to ensure that users and IT professionals acquire the knowledge and skills necessary to take on the roles assigned to them.

Level 4: Managed

The organization establishes quantitative and qualitative objectives, and productivity and quality are evaluated against them. This control is based on the validation of the project's main milestones as part of a planned measurement program.

Level 5: Optimized

Continuous process improvement is the main concern. The organization gives itself the means to identify and measure weaknesses in its processes. It seeks the most effective software engineering practices, especially those whose synergy enables continuous quality improvement.

For more information about ISO-15504, you can visit this page.

MOPROSOFT

Inspired by ISO-15504, MOPROSOFT is actually a creation of the Mexican Software Engineering Quality Association (AMCIS)!

Unlike ISO-15504 and CMMI, MOPROSOFT is designed for enterprises that may not be as big as well-known companies like Microsoft but would still like to achieve global levels of quality in their products. It takes into account the characteristics and environment of small and medium-sized companies, so the requirements and the evaluation level achieved can describe the state of the company in more detail.

MOPROSOFT is divided into three evaluation levels, each covering a different aspect of the company:

Dirección (Direction): This level focuses the company's efforts on applying strategic planning and promoting optimal operation.

Gerencia (Management): This level focuses on improving the management of processes, projects and resources.

Operación (Operation): This level focuses on the specific processes of project administration, as well as on software development and maintenance.

For more information about MOPROSOFT you can follow this link!

IDEAL model

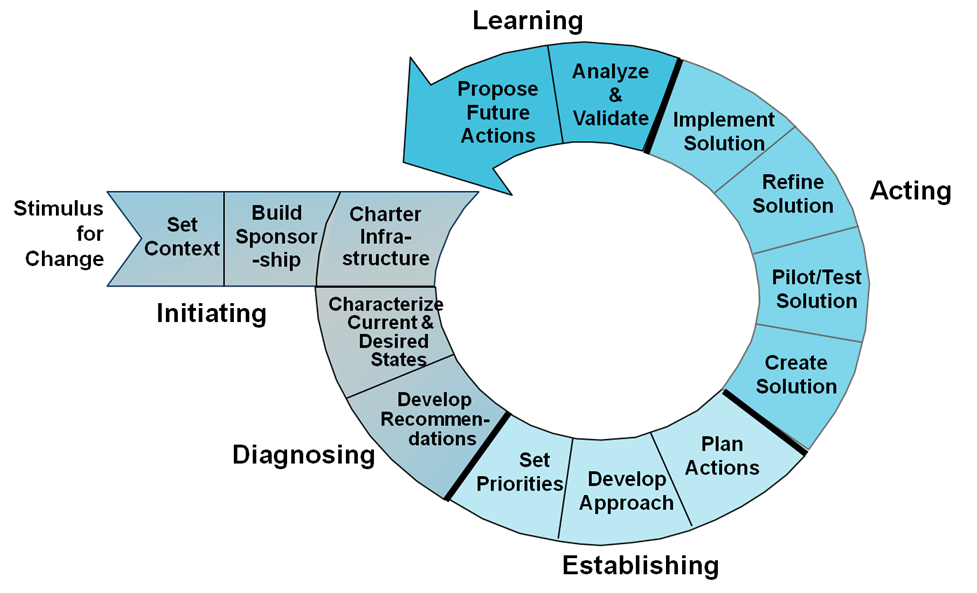

The IDEAL model, created by the Technology Adoption Architectures Team, is named after the five phases that make it up: Initiating, Diagnosing, Establishing, Acting and Learning. These phases are further divided into fourteen activities, which can be seen in the image above. It is perhaps the most flexible of the models described in this entry, and like the others, it serves as a roadmap for improving the company it is applied to.

The Initiating Phase

Critical groundwork is completed during the initiating phase. The business reasons for undertaking the effort are clearly articulated. The effort's contributions to business goals and objectives are identified, as are its relationships with the organization's other work. The support of critical managers is secured, and resources are allocated on an order-of-magnitude basis. Finally, an infrastructure for managing implementation details is put in place.

The Diagnosing Phase

The diagnosing phase builds upon the initiating phase to develop a more complete understanding of the improvement work. During the diagnosing phase, two characterizations of the organization are developed: the current state of the organization and the desired future state. These organizational states are used to develop an approach for improving business practice.

The Establishing Phase

The purpose of the establishing phase is to develop a detailed work plan. Priorities are set that reflect the recommendations made during the diagnosing phase as well as the organization's broader operations and the constraints of its operating environment. An approach is then developed that honors and factors in the priorities. Finally, specific actions, milestones, deliverables, and responsibilities are incorporated into an action plan.

The Acting Phase

The activities of the acting phase help an organization implement the work that has been conceptualized and planned in the previous three phases. These activities will typically consume more calendar time and more resources than all of the other phases combined.

The Learning Phase

The learning phase completes the improvement cycle. One of the goals of the IDEAL Model is to continuously improve the ability to implement change. In the learning phase, the entire IDEAL experience is reviewed to determine what was accomplished, whether the effort accomplished the intended goals, and how the organization can implement change more effectively and/or efficiently in the future. Records must be kept throughout the IDEAL cycle with this phase in mind.
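Since the learning phase completes one improvement cycle and feeds the next, the five phases can be modeled as a simple loop. This minimal Python sketch assumes, for simplicity, that each new cycle starts again at the initiating phase; the `next_phase` and `run_cycles` helpers are hypothetical and ignore the fourteen activities inside the phases.

```python
from enum import Enum


class Phase(Enum):
    """The five IDEAL phases, in the order they are carried out."""
    INITIATING = "Initiating"
    DIAGNOSING = "Diagnosing"
    ESTABLISHING = "Establishing"
    ACTING = "Acting"
    LEARNING = "Learning"


PHASES = list(Phase)  # definition order is the execution order


def next_phase(current: Phase) -> Phase:
    """Return the phase that follows `current`.

    Assumption for this sketch: after LEARNING, a new improvement cycle
    begins again at INITIATING.
    """
    index = PHASES.index(current)
    return PHASES[(index + 1) % len(PHASES)]


def run_cycles(cycles: int) -> list[str]:
    """Walk through `cycles` complete IDEAL cycles and return the phase names visited."""
    visited = []
    phase = Phase.INITIATING
    for _ in range(cycles * len(PHASES)):
        visited.append(phase.value)
        phase = next_phase(phase)
    return visited


print(" -> ".join(run_cycles(1)))
# Initiating -> Diagnosing -> Establishing -> Acting -> Learning
print(next_phase(Phase.LEARNING).value)  # Initiating
```

Keeping the phase order in a single list and wrapping around with the modulo operator makes the cyclic nature of the model explicit: there is no terminal phase, only the start of the next cycle.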

For more information about the IDEAL model you can follow this link